Understanding Deployment¶

It is important to understand the deployment process in xpresso.ai. The deployment process is a bit different, depending on what is being deployed, and where.

Deployment of non Big Data components¶

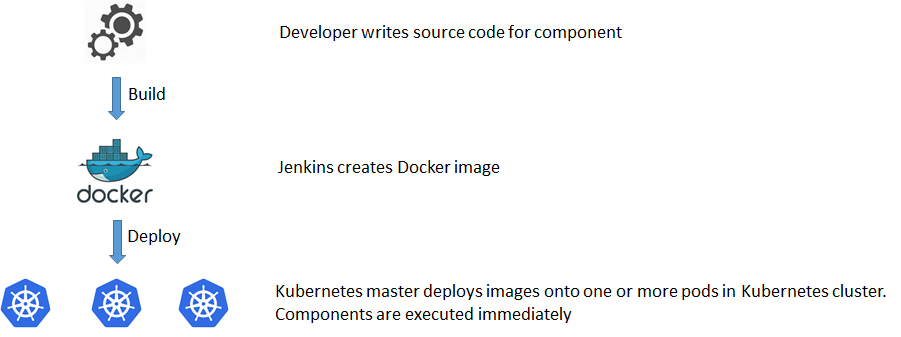

Non Big Data components (i.e., jobs or services written in Java or python) are deployed to Kubernetes, as illustrated in the figure below.

The developer creates a component and populates it with source code

Jenkins converts the source code of the component to a Docker image.

When the component is deployed, xpresso.ai instructs the Kubernetes master to deploy replicas of the image to pods on the Kubernetes cluster (the number of replicas required is specified by the developer).

The components are run immediately.

Developers can check the Kubernetes dashboard to see the pods running, and view the component logs.

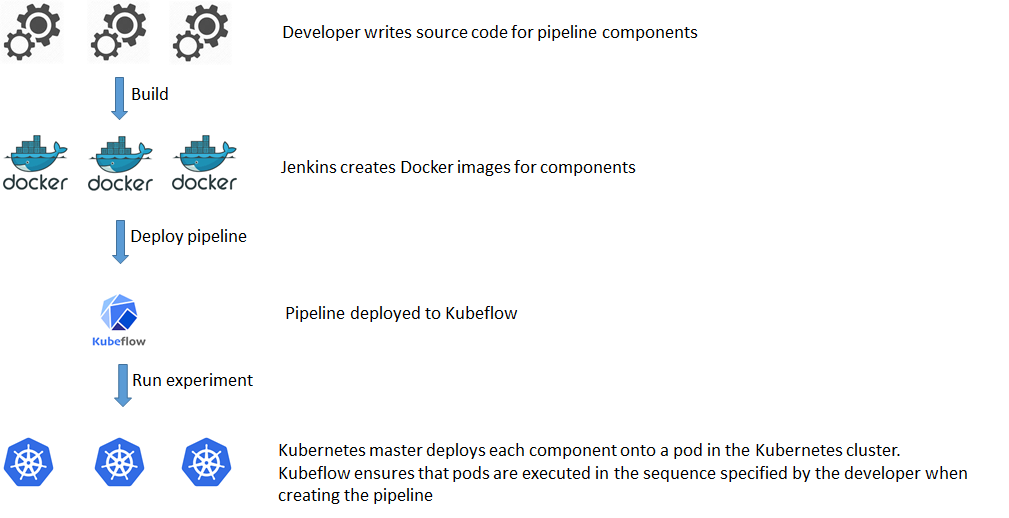

Deployment of non Big Data pipelines¶

The developer creates components (jobs or pipeline jobs) and populates them with source code

The developer creates a pipeline, assigns components to it, and defines the execution sequence

Jenkins converts the source code of each component in the pipeline to a Docker image.

The pipeline has no code associated with it, but is just a virtual container containing the individual components

xpresso.ai deploys the pipeline to Kubeflow, which itself runs on top of the Kubernetes cluster.

Developers can see the pipeline execution graph on the Kubeflow dashboard

When an experiment is run on the the pipeline, Docker images of the components are executed within Kubernetes pods. Kubeflow ensures that the pods are executed in the sequence specified by the developer when creating the pipeline.

Developers can see the experiments running in the Kubeflow dashboard. They can click on individual nodes of the execution graph to see logs of individual components

A non Big Data pipeline has no code associated with it, and hence, does not need to be built. Individual components of the pipeline, of course, need to be built

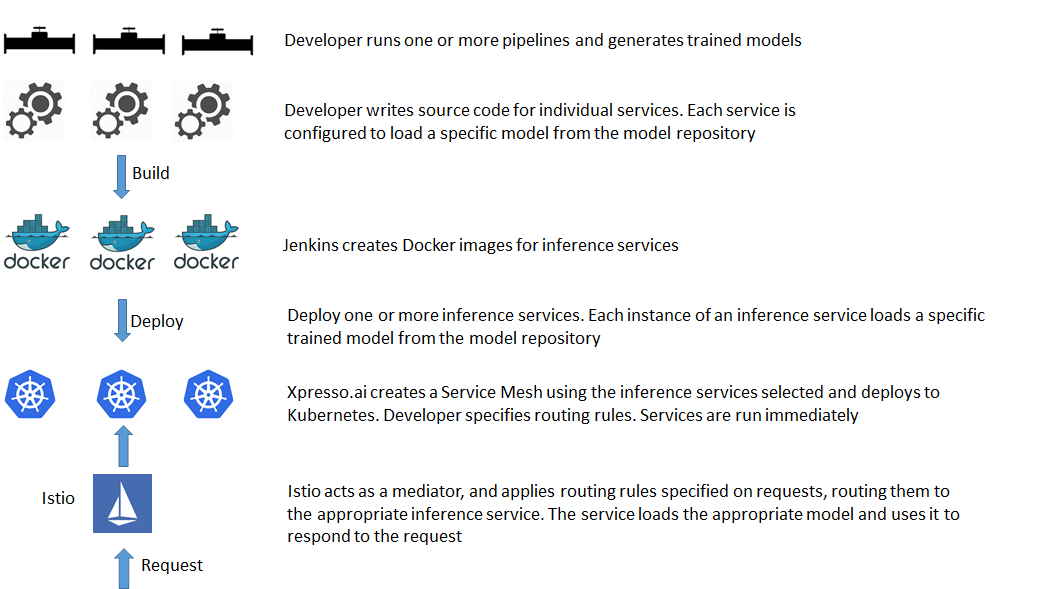

Deployment of Inference Services¶

The developer creates and runs experiments on pipelines, generating trained models. The pipelines should be coded so as to store the trained model in the xpresso.ai model repository

The developer creates inference services and populates them with source code. Each inference service typically works with a specific trained model - it loads the model and uses it to respond to queries

The developer selects one or more inference services, and associates one or more models with each (a model is represented as the output of a specific pipeline run)

The developer deploys the selected inference services, after specifying a routing strategy. Under the covers, xpresso.ai creates a Service Mesh using the inference services selected

xpresso.ai deploys the service mesh to Kubernetes with Istio as a mediator. The individual services in the mes are executed immediately

When a request is made to the service mesh, Istio applies the routing strategy specified by the developer and routes the request to one of the inference services in the mesh. The selected inference service loads the associated model, and uses it to respond to the request (typically with a prediction)

Inference Services are not available on Big Data environments, because services are not supported on these environments

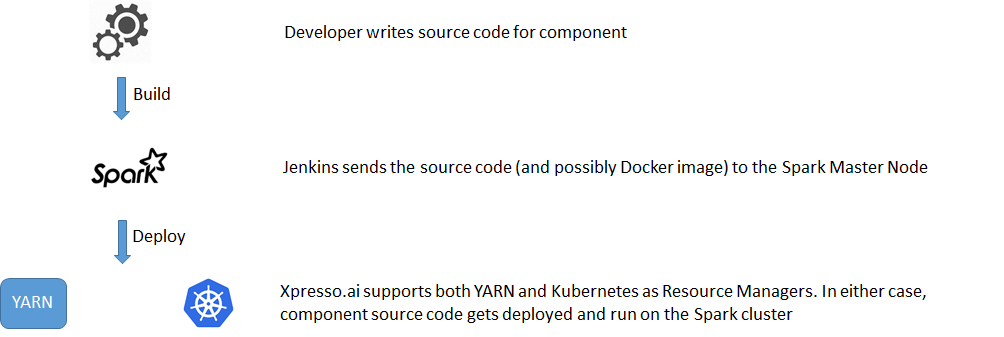

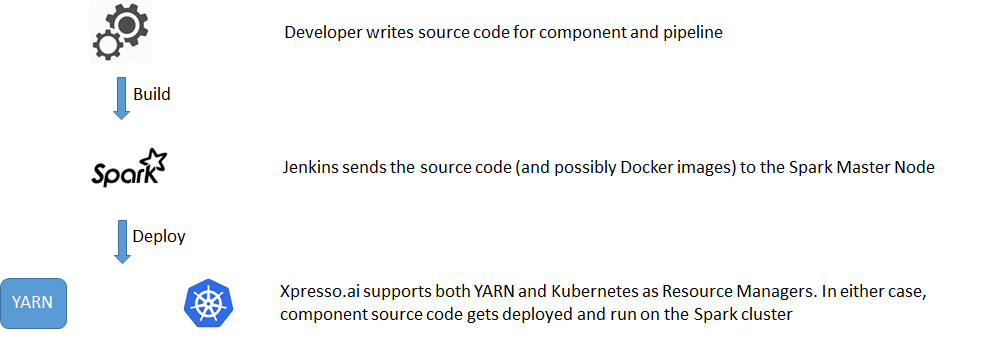

Deployment of Big Data Components¶

xpresso.ai supports both YARN and Kubernetes as the Resource Manager for the Spark cluster. In the case of Kubernetes, the Spark worker nodes are run within Docker images on the Kubernetes cluster

The developer creates a component and populates it with source code

Jenkins deploys the source code onto the Spark Master node (in case Kubernetes is being used as the Resource Manager, a Docker image is also created for the component)

When the component is deployed, xpresso.ai submits the source code to the Spark Master node, which then (through the appropriate Resource Manager) distributes the code to the worker nodes

Developers can review component progress through an Ambari dashboard (if YARN is being used as the Resource Manager), or through the Kubernetes dashboard (if Kubernetes is being used as the Resource Manager)

Deployment of Big Data Pipelines¶

xpresso.ai supports both YARN and Kubernetes as the Resource Manager for the Spark cluster. In the case of Kubernetes, the Spark worker nodes are run within Docker images on the Kubernetes cluster

The developer creates components and populates them with source code

When the developer creates a pipeline, xpresso.ai creates skeleton code for the pipeline. The developer must populate the pipeline with code as well. Thus, Big Data pipelines are not mere containers of components - Big Data pipelines actually need source code, unlike non Bi Data pipelines.

The Build pipeline deploys the source code for the pipeline as well as its constituent components onto the Spark Master node (in case Kubernetes is being used as the Resource Manager, Docker images are created and distributed as well)

When the pipeline is run, xpresso.ai submits the source code to the Spark Master node, which then (through the appropriate Resource Manager) distributes the code to the worker nodes

Developers can review pipeline progress through an Ambari dashboard (if YARN is being used as the Resource Manager), or through the Kubernetes dashboard (if Kubernetes is being used as the Resource Manager)

A Big Data pipeline has code associated with it, and hence, needs to be built, along with the individual components of the pipeline

What do you want to do next?